Load balancing @ Web Tier: Improve the response time for the web users.

Most (if not all) order-capturing systems need better response times for their users, and this cannot be achieved by running the application on a single server. A few months back we had to deal with this problem, and we used an external F5 BIG-IP load balancer to get around it.

Things we needed to consider while using an external load balancer:

1. Sticky Session: Most web applications maintain some session state, whether it's as simple as a user name or as complex as a shopping cart. It’s important that the load balancer forwards every request from the same user to the same server.

2. Health Monitor: It’s important that the load balancer does not forward requests to failed or crashed servers. It should also resume dispatching requests to a server once it comes back [this can be achieved with some kind of ping protocol].

3. Load Balancing Algorithms: We used simple Round-Robin [with ratio configuration], but other algorithms also exist, such as load-based ones, which dispatch each request to the server with the least load.

All of the above can be achieved with an external load balancer, but when a server fails we also need to transfer its user sessions to the other available servers. I am going to skip this problem for now [we'll talk about it in the next post], as it's a high-availability problem, not a load-balancing one.
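To make the three considerations concrete, here is a minimal sketch of how they fit together. It is not how the F5 implements them; the class name `StickyRatioBalancer`, its methods and the server names are all made up for illustration, and the "ratio" is approximated by repeating a server in the rotation.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical illustration only: ratio round-robin + sticky sessions + health marks.
class StickyRatioBalancer {
    private final List<String> rotation = new ArrayList<>();      // each server repeated "ratio" times
    private final Set<String> down = new HashSet<>();             // servers the health monitor marked as failed
    private final Map<String, String> sticky = new HashMap<>();   // session id -> pinned server
    private int next = 0;

    StickyRatioBalancer(Map<String, Integer> ratios) {
        for (Map.Entry<String, Integer> e : ratios.entrySet()) {
            for (int i = 0; i < e.getValue(); i++) {
                rotation.add(e.getKey());
            }
        }
    }

    // A real health monitor would call these based on periodic pings.
    synchronized void markDown(String server) { down.add(server); }
    synchronized void markUp(String server) { down.remove(server); }

    synchronized String route(String sessionId) {
        // Sticky session: reuse the pinned server while it is healthy.
        String pinned = sticky.get(sessionId);
        if (pinned != null && !down.contains(pinned)) {
            return pinned;
        }
        // Otherwise walk the rotation once, skipping failed servers.
        for (int i = 0; i < rotation.size(); i++) {
            String candidate = rotation.get(next);
            next = (next + 1) % rotation.size();
            if (!down.contains(candidate)) {
                sticky.put(sessionId, candidate);
                return candidate;
            }
        }
        throw new IllegalStateException("no healthy server available");
    }
}
```

Note that when a pinned server goes down, the session is simply re-pinned to another server; whatever session state lived on the failed server is lost in this sketch.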

Load Balancing within the application [EJB & JMS]: Improve the processing within application.

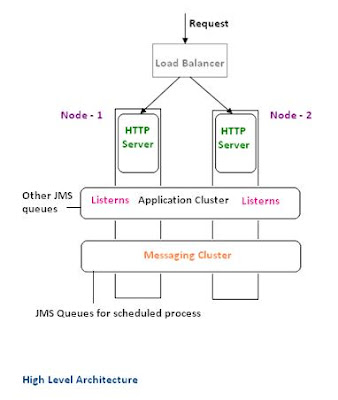

Consider a scenario where your application runs a scheduled process that has to process n events (where n is a very large number). To achieve parallelism within J2EE applications we have to use JMS or the Work Manager APIs. Even if the scheduler picks up n orders and, to process them efficiently, puts them on a JMS queue, all the messages will be processed by the listeners local to that queue.

To achieve true parallelism when the application is deployed in a cluster, the JMS queues need to be remote to the listeners. So, to utilize all the servers within the cluster, we created a separate "messaging cluster" to hold some of the JMS queues, which are remote to the listeners deployed in the application cluster. I soon realized that the queues for orders which need immediate processing should be deployed per server [performance reasons: messages are processed quicker via local listeners].

Another benefit of this architecture is high availability of the messages in the JMS queue. If one of the nodes in the cluster fails, the JMS queue, being deployed in a cluster, does not lose messages. I will save the high-availability discussion for the next post.
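The idea of one queue drained by listeners on every application server can be sketched in plain Java. This is only an in-process stand-in, not JMS: a `LinkedBlockingQueue` plays the role of the queue on the messaging cluster, and each thread plays a listener on one application server. The class name `SharedQueueSketch` and the server names are assumptions made for the example.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in: one shared queue, one "listener" thread per application server.
class SharedQueueSketch {

    // Returns how many orders each server ended up processing.
    static Map<String, Integer> drain(int orderCount, List<String> servers) {
        BlockingQueue<Integer> sharedQueue = new LinkedBlockingQueue<>();
        for (int i = 0; i < orderCount; i++) {
            sharedQueue.add(i); // the scheduler enqueues every order it picked up
        }

        Map<String, AtomicInteger> processed = new ConcurrentHashMap<>();
        ExecutorService listeners = Executors.newFixedThreadPool(servers.size());
        for (String server : servers) {
            AtomicInteger counter = new AtomicInteger();
            processed.put(server, counter);
            // Every listener competes for messages on the same queue.
            listeners.submit(() -> {
                while (sharedQueue.poll() != null) {
                    counter.incrementAndGet();
                }
            });
        }

        listeners.shutdown();
        try {
            if (!listeners.awaitTermination(30, TimeUnit.SECONDS)) {
                throw new IllegalStateException("listeners did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }

        Map<String, Integer> result = new TreeMap<>();
        processed.forEach((server, count) -> result.put(server, count.get()));
        return result;
    }
}
```

Calling `SharedQueueSketch.drain(1000, Arrays.asList("app1", "app2", "app3"))` returns per-server counts that always add up to 1000; how the work splits between servers varies from run to run.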

EJB load balancing: Typically, orders are processed asynchronously [SOA architecture], e.g. orders captured by an order capturing system (OCS) are sent to an MQ, from which they are picked up by some kind of order fulfillment system (OFS) for further processing. Between these two layers, OCS and OFS, there has to be an integration layer such as an ESB, WebMethods, etc. Typically, the integration layer picks up the message from the MQ, does some transformations, adds transit logs to the DB and routes the message to an appropriate service. Let's assume that the fulfillment system is running in a cluster and an EJB named FulfillmentService is deployed in that cluster. In this scenario, the only load balancing opportunity lies with the integration layer, which can choose which EJB skeleton processes the request. Almost all application containers expose APIs to achieve this via an EJB client; I have only tested this with WebSphere 6.1 ND.

ADVICE: If you are using IBM WebSphere, you had better use the IBM JRE on the client side as well.

While writing a client for an EJB deployed in a cluster, we just have to provide a cluster-aware initial context. The first call to the EJB is intercepted by an interceptor known as the Location Service Daemon, which gives the client enough information about the cluster environment in which the EJB is deployed. From this point on, the client is cluster-aware and takes on the load balancing responsibilities as well.

    // Imports needed by this snippet
    import javax.naming.Context;
    import javax.rmi.PortableRemoteObject;

    // Single-server variant (getInitialContext is my own helper; its body is not shown here):
    // Context ctx = getInitialContext("iiop://FA-LT5824.us.farwaha.com:38014");

    // For a cluster: list the bootstrap addresses of the cluster members
    Context ctx = getInitialContext("corbaloc::FA-LT5824.us.farwaha.com:9811,:MA-LT5184.us.farwaha.com:9811");
    if (ctx == null) {
        System.out.println("Context received is null");
        System.exit(1); // non-zero exit status signals the failure
    }

    // Single-server lookup:
    // Object obj = ctx.lookup("FulfillmentService");

    // Cluster-aware lookup
    Object obj = ctx.lookup("cell/clusters/ClusterEJB/FulfillmentService");
    IFulfillmentServiceBeanHome home = (IFulfillmentServiceBeanHome) PortableRemoteObject.narrow(
            obj, IFulfillmentServiceBeanHome.class);
    IFulfillmentService bean = home.create();
    bean.importOrder("testXML"); // just a test

Associated distributed problems:

1. Concurrency: If two updates are handled by two different EJBs at the same time, which one should be persisted? Always use optimistic locking [check the version before saving]. Yes, Hibernate provides this facility, but sometimes it's overkill [maybe I will write another post on optimized concurrency control].

2. Race Condition: Suppose there are two updates, UD1 and UD2, and due to asynchronous processing UD2 is processed before UD1 is received. What will happen when the system receives UD1? This is a typical sequencing problem, so it’s a good idea to always assign a version id to every update and make sure the system never accepts a version lower than the one it currently holds [this is only true when the system accepts full updates; in partial-update scenarios the system may lose important updates].
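Both version checks can be sketched without Hibernate. The following is an in-memory illustration under my own assumptions, not the code we ran in production: `VersionedOrderStore`, `saveIfUnchanged` and `acceptUpdate` are hypothetical names, and a `ConcurrentHashMap` stands in for the database.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

// Hypothetical in-memory store showing the two version checks described above.
class VersionedOrderStore {

    static final class Order {
        final long version;
        final String payload;

        Order(long version, String payload) {
            this.version = version;
            this.payload = payload;
        }
    }

    private final ConcurrentMap<String, Order> orders = new ConcurrentHashMap<>();

    Order get(String id) {
        return orders.get(id);
    }

    // Optimistic locking: the save succeeds only if the stored version is still
    // the one the caller read (0 means "no order stored yet").
    boolean saveIfUnchanged(String id, long versionRead, String payload) {
        boolean[] saved = {false};
        orders.compute(id, (key, current) -> {
            long currentVersion = (current == null) ? 0 : current.version;
            if (currentVersion != versionRead) {
                return current; // someone else saved first; keep their data
            }
            saved[0] = true;
            return new Order(versionRead + 1, payload);
        });
        return saved[0];
    }

    // Sequencing: a full update is rejected if its version is lower than the held one.
    boolean acceptUpdate(String id, long incomingVersion, String payload) {
        boolean[] accepted = {false};
        orders.compute(id, (key, current) -> {
            if (current != null && incomingVersion < current.version) {
                return current; // late arrival of an older update; ignore it
            }
            accepted[0] = true;
            return new Order(incomingVersion, payload);
        });
        return accepted[0];
    }
}
```

With this store, if UD2 (version 2) arrives first and UD1 (version 1) arrives later, `acceptUpdate` returns false for UD1 and the order keeps the UD2 payload.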

** I have tested this architecture only on IBM WebSphere ND 6.1.17. I believe that the basic concepts remain the same and that it's much easier to achieve the same with BEA WebLogic or the JBoss app container.
